Moving to a newer architecture is an inevitable evolution. The ARM series is a particularly good choice, as knowledge of its core functionality is to a large extent portable across manufacturers. While ARM Cortex-M chips are close to 8-bit AVRs in footprint and price, they offer more processing power and more peripherals. The more complex architecture and ultra-small packaging are often raised as counter-arguments on hobbyist forums. Does it really matter? Here is my subjective comparison of these vast families.

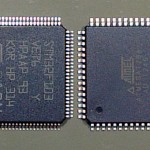

My comparison is a little biased. While the ARM Cortex-M0 series is targeted as competition for 8-bit chips, I have chosen the mainstream M3 series, as I find it the best candidate for my next projects. For comparison I took the chip I have worked with intensively for the last year: the AVR ATmega128, which comes in a 64-pin TQFP 14x14mm package with 128kB flash and 4kB RAM (plus external RAM), running an 8MHz clock at 3.3V. I matched it with the STM32F103RB, which has the closest fundamental physical features: a Cortex-M3 from ST in a 64-pin LQFP 10x10mm package with 128kB flash and 20kB RAM, running an 8-72MHz clock.

Disadvantages?

Let's see the bad points first. I will not argue about architecture or datasheets; some people see ARM as more complex than the 8-bit AVR, I do not. Every platform has plenty of tutorials and samples, and you can start from very simple yet working programs. Most complaints from hobbyists focus on the packaging of the M3 series: while the most miniaturized AVRs (and ARM Cortex-M0) come in through-hole DIP or easy-to-handle SMT packages like TQFP, M3 parts start with LQFP and go towards packages barely manageable at home (BGA or even WLCSP). Comparing the TQFP and LQFP used here is straightforward: the LQFP has about twice the pin density (0.5mm pin pitch versus 0.8mm). This poses a couple of problems: the pins bend easily, which barely happens with TQFP; LQFP requires quite good soldering skills; and finally, making a PCB at home (using the thermal-transfer process) with pads this fine is very difficult.

My reaction to these arguments was just a shrug: I had already soldered a lot of generic chips with the same or similar density (TSSOPs and MSOPs in the WLS project) and had even started SMT soldering with LQFP rework. Making a PCB for LQFP at home is painful yet possible. For some time now I have preferred prototyping with breakout boards that adapt SMT packages to a DIP pinout, and wiring them on breadboards. In cases where some circuitry requires strict routing (like DC/DC converters) I was able to create such tiny pads even at home. So I dismiss the hobby-grade counter-arguments. Let's see the benefits.

Performance

What is immediately visible is the clock range. While most ATmegas run at up to 16-20MHz, the Cortex-M3 can run several times faster using its configurable PLL frequency multiplier. What is not visible is the real processing power. In benchmarks the STM32F103RB scored on average 3.5x higher per MHz than the ATmega128's cousin, the ATmega2560. Combined with a clock several times higher, this means the STM32 can simply be an order of magnitude faster than the ATmega.

The real performance boost comes from the wider bus and the wider set of opcodes. A classic issue in the AVR world is division of wide integers, which consumes both extra flash and a lot of computing cycles. Let's compare both platforms with this function:

    uint32_t divide(uint32_t a, uint32_t b) {
        return a / b;
    }

On AVR the compiler generates a helper function (reused by subsequent divisions, of course) that takes 68 bytes, plus 10 bytes for the divide function itself:

MAP file:

    .text  0x00013f24  0x10a  ./main.o
           0x00013f9e         vApplicationStackOverflowHook
           0x00013fce         main
           0x00013f24         divide
           0x00013f2e         initGpiosReducePower

LSS file:

    00013f24 <divide>:
       13f24: 0e 94 69 a0  call 0x140d2 ; 0x140d2 <__udivmodsi4>
       13f28: ca 01        movw r24, r20
       13f2a: b9 01        movw r22, r18
       13f2c: 08 95        ret
    ...
    000140d2 <__udivmodsi4>:
       140d2: a1 e2        ldi r26, 0x21 ; 33
       140d4: 1a 2e        mov r1, r26
       140d6: aa 1b        sub r26, r26
       140d8: bb 1b        sub r27, r27
       140da: fd 01        movw r30, r26
       140dc: 0d c0        rjmp .+26 ; 0x140f8 <__udivmodsi4_ep>
    000140de <__udivmodsi4_loop>:
       140de: aa 1f        adc r26, r26
       140e0: bb 1f        adc r27, r27
       140e2: ee 1f        adc r30, r30
       140e4: ff 1f        adc r31, r31
       140e6: a2 17        cp r26, r18
       140e8: b3 07        cpc r27, r19
       140ea: e4 07        cpc r30, r20
       140ec: f5 07        cpc r31, r21
       140ee: 20 f0        brcs .+8 ; 0x140f8 <__udivmodsi4_ep>
       140f0: a2 1b        sub r26, r18
       140f2: b3 0b        sbc r27, r19
       140f4: e4 0b        sbc r30, r20
       140f6: f5 0b        sbc r31, r21
    000140f8 <__udivmodsi4_ep>:
       140f8: 66 1f        adc r22, r22
       140fa: 77 1f        adc r23, r23
       140fc: 88 1f        adc r24, r24
       140fe: 99 1f        adc r25, r25
       14100: 1a 94        dec r1
       14102: 69 f7        brne .-38 ; 0x140de <__udivmodsi4_loop>
       14104: 60 95        com r22
       14106: 70 95        com r23
       14108: 80 95        com r24
       1410a: 90 95        com r25
       1410c: 9b 01        movw r18, r22
       1410e: ac 01        movw r20, r24
       14110: bd 01        movw r22, r26
       14112: cf 01        movw r24, r30
       14114: 08 95        ret

On ARM it takes 32 bytes of flash in total, most of which takes care of the stack and registers before calling the “udiv” instruction. In a real example, where the function handles more logic than a simple division, this would lead to even more effective code packing:

MAP file:

    .text.divide  0x00000000080002f4  0x20  out/main.o
                  0x00000000080002f4        divide

LSS file:

    uint32_t divide(uint32_t a, uint32_t b) {
     80002f4: b480       push {r7}
     80002f6: b083       sub sp, #12
     80002f8: af00       add r7, sp, #0
     80002fa: 6078       str r0, [r7, #4]
     80002fc: 6039       str r1, [r7, #0]
    return a / b;
     80002fe: 687a       ldr r2, [r7, #4]
     8000300: 683b       ldr r3, [r7, #0]
     8000302: fbb2 f3f3  udiv r3, r2, r3
    }
     8000306: 4618       mov r0, r3
     8000308: 370c       adds r7, #12
     800030a: 46bd       mov sp, r7
     800030c: f85d 7b04  ldr.w r7, [sp], #4
     8000310: 4770       bx lr
     8000312: bf00       nop

The division takes 693 CPU cycles on AVR, while ARM needs 17-27 cycles depending on the arguments. Combining the higher clock with the smaller number of cycles, a math-intensive application can run on the order of 100x faster on ARM.
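The 100x figure can be sanity-checked with a few lines of arithmetic. This is only a rough sketch: the cycle counts are the ones quoted above, while the clock values passed in (16MHz for the AVR and 72MHz for the STM32, both taken from the clock ranges mentioned earlier) and the function names are assumptions made for illustration.

```c
#include <stdint.h>

/* Time for one operation in microseconds: cycles / MHz = microseconds. */
double op_time_us(uint32_t cycles, uint32_t clock_mhz) {
    return (double)cycles / (double)clock_mhz;
}

/* How many times faster the "fast" platform completes the same operation. */
double speedup(uint32_t slow_cycles, uint32_t slow_mhz,
               uint32_t fast_cycles, uint32_t fast_mhz) {
    return op_time_us(slow_cycles, slow_mhz) / op_time_us(fast_cycles, fast_mhz);
}
```

With the worst-case ARM count, `speedup(693, 16, 27, 72)` gives 115.5. With the AVR at the 8MHz used in this comparison and the best-case ARM count, `speedup(693, 8, 17, 72)` comes out at roughly 367.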

Power consumption

What about power consumption? Faster processing means more current, right? No. Using the same 3.3V power supply, in full run mode at 8MHz with all peripherals on, the ATmega128 needs around 6mA while the STM32F103RB consumes 8.6mA: just a little over 40% more current, which is quite impressive efficiency. Standby mode is even more impressive: still with all peripherals enabled, the ATmega clocked from an external 32kHz crystal takes roughly 15uA, and the STM32F103RB with an 8MHz clock just 3.4uA!
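One way to put the run-mode currents in perspective is to look at the charge drawn per operation rather than per second. The sketch below is only an illustration: it combines the 8MHz currents quoted above with the division cycle counts from the performance section, and the function name is made up for this example.

```c
/* Charge drawn from the supply for one operation, in nanocoulombs.
 * cycles / MHz gives microseconds, and mA * us = nC. */
double charge_per_op_nc(double current_ma, unsigned cycles, double clock_mhz) {
    return current_ma * (double)cycles / clock_mhz;
}
```

For one 32-bit division at 8MHz this gives `charge_per_op_nc(6.0, 693, 8.0)` = 519.75 nC on the ATmega128 against `charge_per_op_nc(8.6, 27, 8.0)` = 29.025 nC on the STM32F103RB, so under these assumptions the slightly higher current is far outweighed by the shorter time the core has to stay busy.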

Peripherals

In terms of peripherals both chips are quite similar: the STM32F103RB has 50% more timers and USARTs, and twice as many SPI and I2C interfaces. While the ATmega can drive external memory, the STM32 has DMA, and larger parts in the family add a flexible static memory controller. What is disappointing is that this specific Cortex-M3 chip does not have EEPROM at all: it must either use an external chip or emulate EEPROM in its flash (the Cortex-M3 can write to the flash area from a regular program). Slightly more expensive M3s do have EEPROM though.
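The idea behind emulating EEPROM in flash can be sketched as an append-only log: flash bits can only be programmed from 1 to 0, so every update is written as a new record into the next erased word, and a read returns the most recent record for a given key. The code below is a host-side simulation under loud assumptions: the RAM array stands in for a reserved flash page, the page size and all function names are hypothetical, and real flash programming and erase calls are replaced by the `flash_program` and `ee_format` stand-ins. Practical implementations usually alternate between two pages and copy the live records over when one fills up, which is left out here.

```c
#include <stdint.h>
#include <string.h>

#define PAGE_WORDS 256u          /* hypothetical page: 256 x 32-bit words (1kB) */
#define ERASED     0xFFFFFFFFu   /* erased flash reads as all ones */

/* Simulated flash page; on real hardware this would be a reserved flash page. */
static uint32_t page[PAGE_WORDS];

/* Stand-in for a flash page erase: every bit goes back to 1. */
void ee_format(void) {
    memset(page, 0xFF, sizeof page);
}

/* Stand-in for programming one flash word: bits can only go from 1 to 0. */
static void flash_program(uint32_t index, uint32_t word) {
    page[index] &= word;
}

/* Append a (key, value) record into the first erased word.
 * Returns 0 on success, -1 if the page is full or the key is reserved. */
int ee_write(uint16_t key, uint16_t value) {
    if (key == 0xFFFFu) {
        return -1;               /* reserved: would look like an erased word */
    }
    for (uint32_t i = 0; i < PAGE_WORDS; i++) {
        if (page[i] == ERASED) {
            flash_program(i, ((uint32_t)key << 16) | value);
            return 0;
        }
    }
    return -1;
}

/* Scan backwards so the newest record for the key wins.
 * Returns 0 and stores the value on success, -1 if the key was never written. */
int ee_read(uint16_t key, uint16_t *value) {
    for (uint32_t i = PAGE_WORDS; i-- > 0; ) {
        if (page[i] != ERASED && (page[i] >> 16) == key) {
            *value = (uint16_t)(page[i] & 0xFFFFu);
            return 0;
        }
    }
    return -1;
}
```

After `ee_format()`, writing key 1 twice with different values and then calling `ee_read(1, &v)` returns the second value, while the first record simply stays behind in the page as dead data until the next erase.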

Pricing

Next, let’s take a look at retail pricing. Buying a small batch (10 pieces) from China, we pay roughly $1.2 each for the ATmega128 and around $2.5 for the STM32F103RB. Even if the price were several times higher, I would not hesitate to pay for what is offered.

Debugging test

My only investment in ARM so far has been a cheap development board. My first goal was to address the most annoying drawback of 8-bit AVRs: debugging. I have never successfully set up the AVR ICE mkII hardware debugger to work with Eclipse/WinAVR on Windows. The driver collision between AVRStudio (required by the debugger) and the avrdude drivers was always driving (pun intended) me nuts, and libusb on 64-bit Windows was more than a pain in the bottom. My goal was to create a trivial LED-blinking application just to start an interactive debugging session. It took me two long hair-pulling hours of combining Eclipse with OpenOCD, the GDB server and a physical programmer-debugger, just to prove that clicking “pause” in Eclipse would stop the LED blinking. And it did! Fortunately, this time the frustration was fueled only by my lack of knowledge of the new platform (the toolchain totally breaks AVR habits). A quick toolchain setup on OSX gave me an even wider smile. Having a working debugger brings learning closer to the comfort zone, and the only hard job left is to persist in discovering the new landscape.

I agree with you. ARM uCs from Freescale are nearly the same price as AVRs, for similar features.

SRAM is very limited in any 8-bit uC, but not so in basic ARM uCs such as the Cortex-M0+. That means I can write better and more complex applications without worrying much about RAM/flash usage. Segger J-Link is a fantastic debugging tool for ARM uCs. All it takes is spending some time working with ARM.

Just migrated to STM32 too. What I can add:

Pros:

1. Peripherals which you won’t find on AVR, such as RTC, DAC, DMA, random number generator, etc;

2. Timers. There are lots of them, not 2-3 like in case of AVR.

Also, they’re so much better and easier to use: you can have any prescaler you want and any count number, not the fixed AVR bullshit. Also, timers here have lots of cool features, such as dead time generation, hardware encoder driver, etc. And there are lots of outputs, like 4 PWMs per timer is a regular thing;

3. SWD and hardware debugging. Yup, you can do it here without a problem.

On AVR I used software breakpoints and UART, which kinda worked, but hardware debugger is so much better;

Cons:

1. It was VERY hard to migrate from AVRs. I spent around a year just hanging around with thoughts about using more advanced MCUs. Why was it that hard? Because on ARM you lose tons of stuff you take for granted on 8-bit MCUs. The delay lib, for example: there is no such thing, you have to use the SysTick timer, which isn’t an easy thing when you don’t know a thing about your new uC. Other libraries, like an HD44780 driver, you have to write on your own.

No code generator. I used CodevisionAVR and for me it was a shock not to have one here.

Yeah, there is CubeMX and stuff, but it totally sucks. Also, it won’t be easy to use the SPL library the first time.

2. Not a lot of tutorials on the net. But since AVR has been produced since 1996 and STM32 became more or less popular only recently, that’s not surprising. Anyway, it’s possible to start, which is more than enough.

So, in the end I can say that you should only migrate when you feel that 8 bits isn’t enough.

When you completely mastered your AVRs and want even more.

It won’t be easy at all, but 32-bit MCUs are worth it.

Eugene, thanks for your insight. I agree with most of your observations. Migration problems come down to things you know versus things you have to learn while failing a lot, and complexity adds to it. I think, though, that once you get “onboard” it is hard to look back. I am not there yet with ARM; my gut feeling comes from experience in other sw/hw subjects.

Regarding HD44780, you are right that there is no library out of the box (unless you go for the mbed.org SDK and use its TextLCD library). If you are building something new, you can first decide on the library and then buy an LCD with a supported chip. I did that, looking first at u8glib/ucglib and ordering LCD/OLED displays later (note that this library supports AVR as well as ARM). This way I had my graphical LCD up and running on a breadboard within an hour. That “angle of attack” is based on my opinion that time saving (or time-to-market, if you will) is the most important factor these days, so the choice of the combined stack of hardware, system/no-system, libraries, proprietary code etc. must be optimal as a whole. This is true even for a hobbyist whose free time is limited 😉