In the late stage of hardware planning for the new server, I planned for software RAID1. Since this was my first time playing with a disk array, I kept the number of variables to a minimum and chose Ubuntu Linux, the distribution I know best, as the server OS. After overcoming installer defects, I laid the foundations, configured remote graphical access, migrated services, and measured and estimated the platform's TCO.

While preparing for installation, I discovered a limitation of the regular Ubuntu server release: it does not configure RAID and LVM during the installation process. So I switched to the alternate install release, which bundles more exotic server and desktop requirements, RAID and LVM configuration included.

Starting with the latest and greatest version, 12.04, I got stuck on an error message about invalid CD-ROM data that blocked any progress. I double-checked the download's MD5 checksum and it was completely fine. Assuming a faulty build, I decided to try the older 11.10 release and, to my surprise, ran into issues at the bootstrapping stage. That problem was quickly solved with a bit of googling: after replacing the UNetbootin pendrive flashing software with LiveLinux, I could start the installer, configure the options and install the boot partition. The happiness did not last long. Somewhere in the middle of the process, 11.10 broke down, failing on package dependency resolution. And yes, the checksum was OK this time as well.
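Verifying an image against a published checksum list can be sketched in Python. The sketch assumes an `MD5SUMS`-style listing with one `<hash>  <filename>` per line and an optional `*` before the filename. The checksum covers only the downloaded image. It does not cover the files as they end up on the pendrive after flashing.

```python
import hashlib
import os


def md5_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so a ~700 MB ISO never has to fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path: str, md5sums_text: str) -> bool:
    """Compare the file's MD5 with its entry in an MD5SUMS-style listing (assumed format)."""
    name = os.path.basename(path)
    for line in md5sums_text.splitlines():
        parts = line.split()
        # Binary-mode entries prefix the filename with '*'.
        if len(parts) == 2 and parts[1].lstrip("*") == name:
            return parts[0].lower() == md5_of_file(path)
    raise KeyError(f"{name} is not listed in the checksum file")
```

In the call `matches_published("ubuntu-12.04-alternate-i386.iso", open("MD5SUMS").read())`, both file names are placeholders. Point them at the actual download and its checksum list.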

Looking for a solution, I found other people complaining about broken alternate builds. Some reported the package dependency failure. Others reported the invalid CD-ROM message, whose root cause was also broken package dependencies. Reviewing several similar reports on different Ubuntu builds, I noticed one that highlighted truncated package names, which prompted me to check the package structure on the 12.04 pendrive. I found truncated packages quite quickly, and a manual fix allowed me to go on with 11.10. Curious about the “not a valid CD-ROM” message, I reviewed the installer's /var/log/syslog and spotted a problem with the “xserver-xorg-video-radeon” installation. This time the package name was not truncated, yet I had the impression that it was somehow different from the others. Part of the video driver package name appeared to have been cut off when the name was created: it ended with “-0ubunt_i386.deb” instead of “0ubuntu2_i386.deb” like all the other packages. Renaming it fixed the last installer problem. To save others a couple of hours of struggle, I shared my observations with the community.

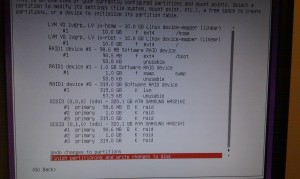
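You can look for truncated package names without eyeballing the pool directory. One rough approach is to compare the files on the stick with the `Filename:` fields of the APT `Packages` index. Every package the index lists should exist under exactly that path. The sketch assumes the usual Debian media layout of `pool/` plus `dists/<codename>/<component>/binary-i386/Packages.gz`, and that layout is an assumption about these particular builds. A mangled name shows up twice in the output: once as missing under its correct name and once as unexpected under its damaged name.

```python
import os
from typing import Iterable, Set, Tuple


def expected_debs(packages_indexes: Iterable[str]) -> Set[str]:
    """Collect every 'Filename:' field from one or more APT Packages index texts."""
    expected = set()
    for index in packages_indexes:
        for line in index.splitlines():
            if line.startswith("Filename:"):
                expected.add(line.split(":", 1)[1].strip())
    return expected


def on_disk_debs(root: str) -> Set[str]:
    """List .deb files under <root>/pool as '/'-separated paths relative to root."""
    found = set()
    for dirpath, _dirs, files in os.walk(os.path.join(root, "pool")):
        for name in files:
            if name.endswith(".deb"):
                rel = os.path.relpath(os.path.join(dirpath, name), root)
                found.add(rel.replace(os.sep, "/"))
    return found


def compare(expected: Set[str], present: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Return (missing, unexpected): listed but absent, and present but unlisted."""
    return expected - present, present - expected
```

To use it, read each component's `Packages.gz` with `gzip.open(path, "rt")`. Pass the texts to `expected_debs` and the stick's mount point to `on_disk_debs`. Then inspect both sets returned by `compare`.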

Finally, I could focus on exploring the RAID and LVM features. With two disks, I chose the following layout:

- boot partition in RAID – each disk carries a copy of GRUB, so the OS can start from the secondary disk when the primary one is damaged. I allow the server to boot with a “degraded” RAID. That way I can add a new disk to the array, or still reach the surviving disk, which is unprotected from a reliability perspective.

- swap in RAID – if swap sits on a failing disk, Linux crashes. Mirrored swap preserves memory consistency and, in theory, lets the running OS survive a disk failure.

- LVM in RAID – all other partitions are managed by LVM, which sits on a third RAID device taking the remaining disk space. I have seen this as the most common pattern, and it isolates logical partitioning from the RAID itself.

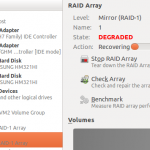
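The alternate installer builds this layout through its menus. A rough manual equivalent can be sketched as a dry run that only prints the commands. The sketch assumes the disks are `/dev/sda` and `/dev/sdb`, each pre-partitioned identically as 1 = boot, 2 = swap and 3 = the rest. The volume group name `vg0` and the choice of ext4 for `/boot` are hypothetical.

```python
from typing import List, Sequence

# Hypothetical device names: check the real ones before running anything.
DISKS = ("/dev/sda", "/dev/sdb")


def raid1_layout_commands(disks: Sequence[str] = DISKS, vg_name: str = "vg0") -> List[str]:
    """Return (not execute) commands for boot, swap and LVM on three RAID1 mirrors."""
    cmds = []
    for md, part in (("/dev/md0", 1), ("/dev/md1", 2), ("/dev/md2", 3)):
        members = " ".join(f"{d}{part}" for d in disks)
        cmds.append(f"mdadm --create {md} --level=1 --raid-devices={len(disks)} {members}")
    cmds += [
        "mkfs.ext4 /dev/md0",           # /boot on the first mirror
        "mkswap /dev/md1",              # mirrored swap
        "pvcreate /dev/md2",            # LVM physical volume on the third mirror
        f"vgcreate {vg_name} /dev/md2",  # logical volumes are carved out of this group later
    ]
    # GRUB on every member disk, so either disk can boot on its own.
    cmds += [f"grub-install {d}" for d in disks]
    return cmds


for cmd in raid1_layout_commands():
    print(cmd)
```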

Swap on RAID works only in theory. I observed unpleasant behavior when simulating a hardware failure. After I disconnected one SATA cable, the system froze for a while and then the kernel crashed with a NULL pointer dereference.

By default, the system halts after a kernel panic. To make it reboot automatically, I set kernel.panic=1 in sysctl.conf. No display is connected to the server, so there is no point in holding the crash screen. Once the server restarted, the RAID array began recovering from the abrupt SATA disconnection. Resynchronizing all the out-of-sync partitions, which in fact meant the whole disk space, took nearly 30 minutes.

Software RAID has its positive side, though: you can use disks of different capacities (and vendors) as long as the RAID partitions fit on them. When a disk fails, finding a replacement is no problem. Also, if the disks have different capacities, the non-mirrored partitions on the bigger disk can be used as unreliable storage.
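The capacity arithmetic behind this is simple. A mirror is bounded by the smaller disk. Whatever is left on the larger one can only hold unmirrored partitions. A replacement disk of any vendor works as long as the RAID partitions fit on it. The sizes below are hypothetical, and the sketch ignores partition-table overhead and the GB vs. GiB difference.

```python
from typing import Dict, Sequence


def mirror_capacity(disk_a_gb: float, disk_b_gb: float) -> Dict[str, float]:
    """Two-disk RAID1: mirrored space is limited by the smaller disk."""
    small, large = sorted((disk_a_gb, disk_b_gb))
    return {"mirrored": small, "unmirrored": large - small}


def fits_as_replacement(new_disk_gb: float, raid_partitions_gb: Sequence[float]) -> bool:
    """A replacement only has to hold the RAID partitions, not match the old disk."""
    return sum(raid_partitions_gb) <= new_disk_gb


print(mirror_capacity(1000, 500))                   # hypothetical 1 TB + 500 GB pair
print(fits_as_replacement(750, [0.5, 4, 495.5]))   # boot + swap + LVM partitions
```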

LVM adds another level of indirection to partition management, as it allows resizing, splitting and merging logical partitions. I was curious how it behaves, so I decided to keep dynamic partition management available. Older kernels made LVM mirroring inferior to RAID1, as pointed out here. Modern kernels put RAID1 and LVM mirroring on par, or at least I could not find any relevant argument for using RAID1+LVM instead of plain LVM in mirroring mode. Being new to the setup, I simply chose the well-known pattern.
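Growing a logical volume is the most common use of that flexibility. Below is a dry-run sketch of the usual sequence: check free space in the volume group, extend the logical volume, then grow the filesystem. It assumes an ext3/ext4 filesystem, which `resize2fs` can grow while mounted. The names `vg0` and `home` are hypothetical.

```python
from typing import List


def grow_lv_commands(vg: str, lv: str, extra_gib: int) -> List[str]:
    """Return (not execute) commands to grow an LV and its ext3/ext4 filesystem."""
    if extra_gib <= 0:
        raise ValueError("extra_gib must be positive")
    device = f"/dev/{vg}/{lv}"
    return [
        f"vgs {vg}",                            # free space left in the volume group
        f"lvextend -L +{extra_gib}G {device}",  # grow the logical volume
        f"resize2fs {device}",                  # grow the filesystem to fill the LV
    ]


for cmd in grow_lv_commands("vg0", "home", 10):
    print(cmd)
```

Shrinking goes the other way round: shrink the filesystem first, then the volume. For ext filesystems the filesystem has to be unmounted to shrink, which is why the sketch only covers growing.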

After the system setup, I began preparing for remote administration. Terminal access via SSH is enough, but having a video card on board tempted me to set up remote access to the hardware-accelerated windowing system. With the x11vnc server, any open VNC client can be used, and tunneling the connection through an SSH session secures the otherwise unencrypted VNC protocol. My last concern was securing the VNC view itself. I did not want VNC authentication (a separate VNC password). Instead, I wanted remote and local access to be the same: the user opens a VNC session and sees the Ubuntu login screen. The problem was that if the connection was lost after login, the next reconnection landed on the unlocked user screen.
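The tunnel setup can be sketched as two argument lists, one for each side. `x11vnc -localhost` refuses direct network connections, so the only way in is through the `ssh -L` forward. The host `admin@homeserver`, display `:0` and port 5900 are assumptions.

```python
from typing import List, Tuple


def vnc_over_ssh(server: str, port: int = 5900, display: str = ":0") -> Tuple[List[str], List[str]]:
    """Return (server_cmd, client_cmd) for VNC carried inside an SSH tunnel."""
    server_cmd = [
        "x11vnc",
        "-display", display,
        "-localhost",           # listen on the loopback interface only
        "-rfbport", str(port),  # fixed port, so the tunnel knows where to go
        "-forever",             # keep serving after a client disconnects
    ]
    client_cmd = [
        "ssh", "-N",                       # no remote command, forwarding only
        "-L", f"{port}:localhost:{port}",  # local port -> server's loopback port
        server,
    ]
    return server_cmd, client_cmd


server_cmd, client_cmd = vnc_over_ssh("admin@homeserver")
print(" ".join(server_cmd))
print(" ".join(client_cmd))
```

With the client command running, for example via `subprocess.Popen(client_cmd)`, the VNC viewer connects to `localhost:5900` on the client machine.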

I expected a lost connection to lock the screen. The catch is that X Window screen locking works on a different layer than network connectivity. I realized that the x11vnc ‘-gone’ option could trigger screen locking. So far so good, but on the GNOME desktop the construction “x11vnc […] -gone ‘gnome-screensaver-lock -l’” started the screensaver on connection loss and left the screen unlocked. It took me some time to understand that the GNOME command has to be executed by the owner of the GNOME desktop session, while x11vnc runs as root. I found my own solution and shared it on a local Ubuntu forum.
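Here is a rough sketch of the "run it as the desktop owner" idea. It is not the solution posted on the forum. It finds the `gnome-session` process, takes its owner and its `DISPLAY` and `DBUS_SESSION_BUS_ADDRESS` variables, and runs the locker through `sudo -u`. Three points are assumptions: the session process name, the idea that those two variables are what the locker needs, and the use of `gnome-screensaver-command -l` in place of the command quoted above.

```python
import os
import pwd
import subprocess
from typing import Dict, List, Optional, Tuple


def find_desktop_session(process_name: str = "gnome-session") -> Optional[Tuple[str, Dict[str, str]]]:
    """Return (owner, environment) of the desktop session process, read from /proc.

    Reading another user's /proc/<pid>/environ needs root, which x11vnc has here.
    """
    for pid in filter(str.isdigit, os.listdir("/proc")):
        try:
            with open(f"/proc/{pid}/comm") as f:
                if f.read().strip() != process_name:
                    continue
            user = pwd.getpwuid(os.stat(f"/proc/{pid}").st_uid).pw_name
            with open(f"/proc/{pid}/environ", "rb") as f:
                entries = f.read().split(b"\0")
        except (OSError, KeyError):
            continue  # process gone, access denied, or unknown uid
        env = dict(e.decode(errors="replace").split("=", 1) for e in entries if b"=" in e)
        return user, env
    return None


def lock_command(user: str, env: Dict[str, str]) -> List[str]:
    """Build a command that runs the screen locker as the session owner, not as root."""
    keep = [f"{k}={env[k]}" for k in ("DISPLAY", "DBUS_SESSION_BUS_ADDRESS") if k in env]
    return ["sudo", "-u", user, "env", *keep, "gnome-screensaver-command", "-l"]


def lock_session() -> None:
    """Intended as the target of x11vnc -gone: lock the desktop if someone is logged in."""
    found = find_desktop_session()
    if found is not None:
        subprocess.run(lock_command(*found), check=False)
```

The `-gone` option would then point at a small wrapper script that imports this module and calls `lock_session()`. The script's name and location are up to you.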

Setting up wake-on-LAN was the icing on the cake. I wanted WOL enabled in case I remotely shut down the server instead of rebooting it. Again it was tricky: WOL did not work, no matter how hard I tried to send the magic packet from the router to the server. My desktop PC had a sleepless night while I tested where the problem was. The mini-ITX board was definitely to blame. None of the tricks I found worked with Ubuntu 12.04. Finally, I found and installed a different Ethernet driver [LINK] that did the job.
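For testing from a desktop PC rather than the router, a magic packet is easy to build by hand. It is six `0xFF` bytes followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast to port 9. The sketch below does exactly that. The MAC address in the test is a placeholder.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """6 x 0xFF followed by the 6-byte MAC address repeated 16 times (102 bytes)."""
    raw = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(raw) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    return b"\xff" * 6 + raw * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the packet over UDP; the sleeping NIC matches on its content."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        s.sendto(magic_packet(mac), (broadcast, port))
```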

Armed with a remotely accessible server, I started migrating data backups and photos, which are now served an order of magnitude faster (SATA2 disk transfer over Wi-Fi, compared to transfer from a USB2 external disk). It was quick and dirty, since Samba was already pre-installed and the configuration worked almost just by copying the config file from the router.

Migrating WordPress was also smooth. With MySQL installed, I copied the database files and configured access rights once again. With plenty of memory available, I gave apache2 a shot. It took me a while to configure, since I had been using lighttpd before. To my surprise, apache2 configuration was more cumbersome than lighttpd's. What is more, lighttpd was even slightly faster. Measured with Firebug, the same WordPress website (caching switched off) with the same PHP5 configuration loaded on average in 2.06s on apache2 and 1.94s on lighttpd. With WordPress caching switched on (skipping the PHP interpreter), the results were similar: 1.03s vs. 0.96s. I decided to use the proven configuration from the router and switched back to lighty.
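Expressed as a reduction in load time relative to apache2, the averages quoted above show how small the gap is:

```python
def load_time_reduction_pct(baseline_s: float, candidate_s: float) -> float:
    """How much shorter candidate_s is than baseline_s, as a percentage of baseline_s."""
    return (baseline_s - candidate_s) / baseline_s * 100


# Average Firebug load times quoted above: (apache2, lighttpd), in seconds.
MEASUREMENTS = {
    "caching off": (2.06, 1.94),
    "caching on": (1.03, 0.96),
}

for label, (apache2_s, lighttpd_s) in MEASUREMENTS.items():
    print(f"{label}: lighttpd {load_time_reduction_pct(apache2_s, lighttpd_s):.1f}% faster")
```

That is roughly 6 to 7% in both cases, a real but modest difference.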

Compared to the router, the mini-ITX server is a significant improvement. Loading a blog article (caching off) took around 8 seconds on the router and 1.3 seconds on the server. The PHP-intensive administration dashboard showed an even bigger boost, from 25 seconds on the router to 2.5 seconds on the server. Writing articles in the admin console has become a delight.
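As speedup factors, computed from the router and server timings above:

```python
def speedup(before_s: float, after_s: float) -> float:
    """How many times faster the new timing is than the old one."""
    return before_s / after_s


# (router, server) load times quoted above, in seconds.
ROUTER_VS_SERVER = {
    "blog article (caching off)": (8.0, 1.3),
    "admin dashboard": (25.0, 2.5),
}

for page, (router_s, server_s) in ROUTER_VS_SERVER.items():
    print(f"{page}: {speedup(router_s, server_s):.1f}x faster on the server")
```

This gives about 6x for an article and 10x for the dashboard, which fits the PHP-heavy page gaining the most.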

Now that the router has been relieved of heavy I/O-intensive operations, it can use lighter, more wear-prone storage. I plan to replace its spinning HDD with a microSD card in the near future.

As the server runs 24×7, I took some power measurements to understand its average consumption. The results are as follows:

- 12V/2.5A power adapter in idle – 2.9W

- mini-ITX powered off (WOL active) – 3.5W

- system running idle – 16W

- system processing PHP intense operations – 22W

- stress test (CPU, RAM, I/O on 100% load) – 24W

Compared to the router, which draws 9W under the stress test, the result is more than good. I now assume the router will draw no more than 4W on average, since services have been migrated off it. Once its HDD is replaced with an SDHC card, I expect it to drop by another watt. The new router+server setup should draw 27W on average, only three times what the router draws today. The yearly total cost of ownership (equal to the cost of power consumption) increased from 11 euro (78kWh/year) to 33 euro. One quick lunch skipped over the year 🙂
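Here is the yearly energy and cost arithmetic as a small sketch. The electricity price is not stated above. It is inferred from the router figures (11 euro for 9W running all year), so treat it as an assumption rather than a quoted tariff. Because cost scales linearly with average power, 27W = 3 × 9W lands at 3 × 11 = 33 euro.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def annual_kwh(avg_watts: float) -> float:
    """Energy used in a year by a device drawing avg_watts continuously."""
    return avg_watts * HOURS_PER_YEAR / 1000


# Inferred, not quoted: the price that makes a 9 W router cost 11 euro per year.
PRICE_EUR_PER_KWH = 11 / annual_kwh(9)


def annual_cost_eur(avg_watts: float) -> float:
    return annual_kwh(avg_watts) * PRICE_EUR_PER_KWH


for label, watts in (("router today", 9), ("router + server", 27)):
    print(f"{label}: {annual_kwh(watts):.1f} kWh/year, {annual_cost_eur(watts):.0f} euro/year")
```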